Can you spot the problem in each of these comments?

// Remember to update the database row with the new status.

// Remember to remove(&cache, entityRef) before you destroy the entity!

// Remember to fulfill this promise with the result of the calculation.

The problem, of course, is that they rely on our fallible human memory!

We'd love a way to guarantee that we remember to do something. Unfortunately, today's compilers and languages can't really solve this problem for us.

But hark! We can change that, with Higher RAII, a technique where we use linear types that can only be destroyed in certain places.

What's a linear type?

If you look up linear types online, you'll find a lot of unhelpful definitions, like a linear type is a type that can't be aliased, can't be cloned, and must be "used" exactly once. 0

That's somewhat unhelpful because, as Vale shows us, you can have a linear struct without any of the above restrictions.

- You can read/modify it as much as you like.

- You can copy it.

- You can make aliases to it.

So for now, let's use a less correct but more helpful definition: A linear struct must eventually be explicitly destroyed. 1

In other words, a linear struct can't just go out of scope. When the user lets a linear struct go out of scope, the compiler gives an error.

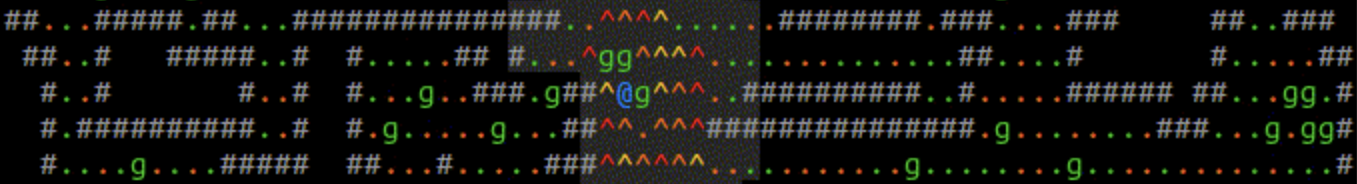

Here we have a function that makes a linear struct x, makes a reference to it, and even reads its contents.

When x goes out of scope, we get a compile error.

linear struct MyStruct {

m int;

}

func foo() {

// x is type MyStruct.

x = MyStruct(42);

// Can make a reference.

// r is type &MyStruct.

r = &x;

// Can read contents

println(r.m);

// ERROR: Undestructed linear `x`.

}

Other languages might automatically clean it up, or call a destructor or drop() method, but here we've opted into the compiler error instead.

If we put in the line destruct x;, it would resolve the compiler error.

This might seem like a weird restriction, but if we use it in a certain way, it can unlock some rather amazing capabilities:

- Keep your caches consistent with your data

- Prevent zombie temporary states

- Prevent concurrency bugs and ensure messages are handled

- Help with database consistency; prevent forgotten updates

- Prevent certain kinds of memory leaks (even GC or Rust leaks!)

- Prevent accidentally canceling an ongoing thread or connection

- Prevent an accidental rollback of a perfectly good transaction

- Guarantee a provided callback is actually called

- Guarantee we eventually log something

So how can linear types help with all of that?

The answer is something I call Higher RAII, explained in the next section.

By the way, if linear types intrigue you, also check out this Developer Voices interview where I talked with Kris Jenkins about them:

At time 15:48 I talked a bit about how we can use linear types for Higher RAII, but the potential is so much more vast than I hinted in that interview. Read on to find out!

Some definitions:

- Wikipedia: "Linear types correspond to linear logic and ensure that objects are used exactly once."

- martriay: "the constraint that each variable can be used exactly once" (though they explain the motivations really well)

- Cornell: In linear logic, you have to “use” every premise exactly once to construct the conclusion.]

- austral-lang.org: a value of a linear type is a value that can only be used once.

One can reconcile the two definitions with enough mental acrobatics. We could say that marking a Vale struct as linear means its owning reference is linear.

- We'd define "use" as "destroy"; now we can only use the linear type once.

- Even though we can alias the struct (with a generational reference), we can't alias/clone the owning reference.

- We can still read/modify the contents of the struct, but we can't directly read/modify the owning reference's underlying pointer.

Higher RAII = Linear types + whitelisted destroyers

Recall that a linear struct must eventually be explicitly destroyed.

Now, imagine a struct that must eventually be explicitly destroyed by a specific function (or functions).

We say that struct has Higher RAII.

I'll explain that term in a bit, 2 but first, let's see an example!

Imagine a Transaction struct that must eventually be committed or rollbacked.

In today's mainstream languages, 3 the user might forget to call commit or rollback, causing the transaction to hang or make a guess. 4 This cost me a few hours the other day in my weird event collector, when I didn't commit one of my transactions.

To set up a Transaction struct with Higher RAII, we need to do three things.

First, make the struct linear.

Here's some Vale as an example. 5

linear struct Transaction {

...

}

This means the struct can never go out of scope; it can never be automatically destroyed. It must be explicitly destroyed now, such as with Vale's destruct keyword, shown below.

Second, create the functions that take the object and explicitly destruct it.

Here's our commit and rollback functions.

// (same file as struct Transaction)

func commit(txn Transaction, db &DB) {

... // code to commit the transaction

destruct txn; // Explicitly destroy

}

func rollback(txn Transaction, db &DB) {

... // code to rollback the transaction

destruct txn; // Explicitly destroy

}

Note the Transaction parameter isn't &Transaction; the functions take the instance itself, not a reference.

They then destruct the Transaction.

Third, make sure that only those functions can destroy the object.

In Vale, you don't actually have to do anything for this step: destruct can only be used from the file defining the struct.

Success! We're now guaranteed to commit or destroy every Transaction object.

More specifically, the user can't accidentally let the Transaction go out of scope, the compiler would notice and give a compile error. The only remaining option for the user is to move the object somewhere else, or destroy it via commit or rollback.

Zooming out, you can see how this struct can only be destroyed by certain explicit operations, which means we've successfully enabled Higher RAII here.

This mechanism has huge benefits if used well, for example by keeping caches consistent or enforcing we update a database, as I'll list further below.

RAII stands for "Resource Acquisition is Initialization", which is a secret key phrase that we C++ programmers whisper at the door to get into our secret covert gatherings. Careful: if they suspect you're an impostor, you'll be SFINAEd.

C++ and Rust's RAII can get halfway, but they can only implicitly call a single zero-arg void-returning function, the destructor. Some pretty amazing things happen when you can choose among multiple destructors with parameters and returns.

The most common strategy is to default to rollback, thankfully.

The HEAD version does this, stable and older versions still use the #!DeriveStructDrop keyword.

Why haven't we seen this before?

Existing languages can't quite do this, and I'll show why below.

C++

A C++ class's destructor is guaranteed to be eventually run, so if we ever want to guarantee some future action, we just put the code in an object's destructor (which is guaranteed to run when the object goes out of scope), and keep the object alive until we want that code to run.

Scott Meyers once said that "the single most important feature in the language is probably destructors, because destructors have led to the introduction of RAII." 6

RAII stands for "Resource Acquisition Is Initialization", a pattern where if you acquire a resource (such as a file descriptor), you should initialize an object with it. Then, the object can make sure to automatically release (such as with fclose) the resource when it goes out of scope. 7

Unfortunately, a C++ class can only have one destructor function, and our Transaction struct (from above) needs two.

A common half-solution is to:

- Put the above db parameter in as a class member DB* db;, since C++ destructors have no parameters. Note this isn't always possible. 8

- Have a commit method, which also sets a committed boolean to true.

- In the destructor, run rollback's logic if the committed boolean is false.

Alas, this doesn't quite solve the problem because the user still might forget to call commit where they should have.

struct Transaction {

DB* db;

bool committed = false;

...

// Don't forget to call this!

void commit() {

... // Commit to db

committed = true;

}

// Destructor

~Transaction() {

if (!committed) {

... // Rollback logic

}

}

}

He goes on to describe how RAII is a general purpose undo mechanism. I would totally agree, except for the way C++ conflated destructors with exception handling. If they didn't use the same function for those two concerns, they could tap into the potential of Higher RAII.

The hardest thing about RAII is explaining the name. Similar to monads, which are like stateful burritos.

This isn't always possible, for example if we don't know the parameter value yet at construction time. Imagine commit wanted a priority integer, only knowable from reading the database while inside the transaction.

Rust

Rust is unfortunately even less capable than C++ here.

Rust can almost do C++'s workaround, but the borrow checker generally dislikes references inside structs, such as that DB reference. 9

Rust's workarounds include either using unsafe, using Rc<RefCell<DB>>, or if one considers those unidiomatic, giving up on RAII. I prefer the second, though I've seen all three happen in the wild.

More specifically, Rust structs containing references generally work well as long as they stay within the same function. But at that point, we might as well be using defer.

RAII vs Higher RAII

Summarizing the C++ section above, RAII is the practice of putting code in destructors that you want to guarantee will eventually run.

From that context, we could say that higher RAII is when we can have multiple named destructors, destructors with parameters, destructors with return values, and we can force the user to explicitly call one of them.

Whereas RAII eventually makes us implicitly call a specific zero-arg non-returning function (the destructor), Higher RAII eventually makes us explicitly call any approved function.

"Future constraint", "future calling" perhaps? "Vow Objects" maybe? I wish "Promise" wasn't taken.

What's the difference between RAII and defer?

Go, Zig, and a few other languages have a defer statement which will run a statement at the end of the current scope (or function). 11

In RAII, we would put that code into an object's destructor, and the code will be run when the object goes out of scope.

They sound like two equivalent approaches, but RAII is more flexible: we can return the object to our caller, so the caller can decide when the destructor is run. Or, we put the object into another object, and the parent object can decide when it's run.

In short: defer can make sure something happens at the end of your function, but RAII can make sure that something happens even past the end of your function.

Now that we see the differences between Higher RAII and RAII and defer, let's see some more examples!

Zig runs a defer'd statement at the end of the scope, as god intended.

Golang is different. For some reason, even if we defer from an inner scope, the defer'd statement will run it at the end of the function. This can sometimes lead to surprising out-of-memory crashes.

Golang, I want to love you, I really do, but every time I get used to your latest nonsense you do something like this.

The Seven Arcane Uses 12 13

There are, of course, more than seven ways to use linear types and Higher RAII.

But behold! Here are the seven most useful ones that I've seen.

I call them "arcane" because they seem confusing at first, but once you know the technique, it unlocks some pretty incredible powers. Makes one feel rather... sorcerous.

Perceptive readers will notice that there's actually eight items here, but seven sounded cooler than eight, and some of them are similar to each other. Besides, everyone already knows I can't count after the last article.

Cache Invalidation

There are two hard problems in computer science: cache invalidation, naming things, and off-by-one errors.

Luckily, naming things is a solved problem.

But how can Higher RAII help with cache invalidation?

This was the topic of my 2022 article, Vale's Higher RAII, the pattern that saved me a vital 5 hours in the 7DRL Challenge.

I'll try to summarize below, but check out the article for a more in-depth explanation!

My game had two data structures:

- levelGoblins List<Goblin>

- locationToGoblinCache HashMap<Location, &Goblin>

...and they must always be in sync.

This, of course, had a risk: I could accidentally remove from the list but not the cache.

So I did two things:

- Made a linear struct GoblinInCacheToken, returned by addGoblinRefToCache, and only ever destroyed by removeGoblinRefFromCache.

- Changed levelGoblins's type to List<(Goblin, GoblinInCacheToken)>.

Now, removing from levelGoblins will give us not only the Goblin, but also the linear GoblinInCacheToken, and the only way to get rid of the GoblinInCacheToken is to hand it to removeGoblinRefFromCache.

Suddenly, we've statically guaranteed that we'll always remove from both the list and the cache!

Get the Result of a Thread or Future

C++'s std::future (and Javascript's Promise) is conceptually a little box that, at some point, will contain the result of some long-running operation.

For example, let's say that each Goblin on our level has a read-only (but expensive!) determineAction function.

In this determineAction, we want to do two things in parallel:

- Find a path to the player.

- Figure out what to shout at the player if there is no path.

That second step will involve calling an LLM on the GPU.

Our code could look like this Typescript here.

async function determineAction(

level: Level,

goblin: Goblin,

player: Player) {

// shoutPromise will eventually contain

// the result string to shout.

const shoutPromise: Promise<string> =

callGPU("taunt", goblin.personality);

// Some expensive pathfinding

const path: Location[] =

findPathAStar(

level, goblin.loc, player.loc);

// Bug here!

return new FollowPathAction(path);

}

Here, we're sending the goblin's personality array to the "shout" function on the GPU, which runs an LLM.

While that's going, we're doing some expensive pathfinding, to figure out if there's a path to the player.

Then at the end, we return what the goblin should do this turn.

But wait! There's a bug here!

We sent the request to the GPU, but forgot to actually use the result.

After many hours of debugging, we realize the problem, and change that last statement to this code.

...

if (path) {

return new FollowPathAction(path);

} else {

return new ShoutAction(

await shoutPromise);

}

}

This is where Higher RAII could have helped: that shoutPromise should have been linear, so we remember to use it.

I suspect a lot of—maybe even all—Promises should be linear.

The stakes are even higher in languages with async/await where concurrent code doesn't run until we .await, because accidentally dropped Futures can cause concurrency bugs. A linear Future solves that bug nicely.

Remember to Resolve a Future

In C++, you can give a std::future<string> to someone, with the understanding that eventually, you will put a string into it.

Hopefully you don't forget!

Or, we can use Higher RAII to make sure we remember. Here's how!

First, we make a linear type that represents "our vow to put a string into that future".

We'll call it FutureVow. 14

linear struct FutureVow<T> {

future &Future<T>;

}

Then, we would make sure that whenever we make a Future, we also make a corresponding FutureVow.

In Vale, that would mean changing Future's constructor to also return the FutureVow at the same time, 15 but one can imagine many mechanisms to ensure one is never created without the other.

Then, we add a function set which consumes our FutureVow.

func set<T>(vow FutureVow<T>, x T) {

vow.future.set(x);

destruct vow;

}

Now there's no way to get rid of a FutureVow except by calling this set function.

Success! Now it's guaranteed that we'll eventually set every Future's value. 16 17

I wanted to call it Promise, because this is literally a linear version of std::promise, but I don't want to confuse things with Javascript's Promise which is completely unrelated to this.

Not terribly difficult, just make the default constructor private and then make a new function called Future that returns a tuple.

"Guarantee" could be a strong word here. A determined programmer can get around this if they tried hard enough.

async/await also helps to prevent this bug in some cases. With async/await, we don't manually resolve a future, we return a value instead, and compilers are pretty good at preventing forgotten return statements.

However, there are times in async/await-enabled languages where we still use raw futures, such as the callGPU example above.

Prevent Zombie Temporary States

By day, my computer helps me write articles like this one.

But by night, it uses an LLM to crawl the internet searching for sufficiently weird yearly events, such as:

- Corgi Races in Minnesota. Go Logan Handsomepants!

- Flora-Bama Mullet Toss where people in Florida throw fish into Alabama!

- Outhouse Races, which is... hard to explain.

Every time it finds a possible event, it adds it to the Event list.

Then, we do some retrieval-augmented generation by googling for information about the event, analyzing each result with an LLM, and coalescing the relevant pages into a summary, updating the summary field and making it visible on the map.

Alas, this was in Python, so I had a bug: I forgot to update the summary in some cases.

This struct was forevermore stuck in its temporary summary-less state, like some sort of zombie stuck between one realm and the next. And since I only show summary'd events on the map, it took me a while to realize a lot of events were missing.

If Python had Higher RAII, I could have prevented this bug!

Here's how Vale would do it:

First, we'd split our struct into two structs, InProgressEvent and Event.

Then, we'd make a finish function to consume the InProgressEvent and return a new Event object with the new summary.

Suddenly, we're guaranteed to eventually give our InProgressEvent to finish to properly make it into an Event!

linear struct InProgressEvent {

name str;

}

struct Event {

name str;

summary str;

}

func finish(

event InProgressEvent,

summary str) {

[name] = destruct event; 18

return Event(name, summary);

}

I also could have used Higher RAII to make sure the tool always reflected its findings into the database. As it is now, the database contains six zombie in-progress events... I let them remain, to remind me why I need to translate the tool to Vale.

[name] = destruct event; is just putting event's old members into new local variables, in this case name.

In the type-state pattern, we split one struct into many, to more closely match the data's state machine diagram.

Remember to Handle Messages

Let's say you have thread A and thread B.

How does thread A ask thread B to run a certain function foo?

You're probably thinking, we should have thread A send a FooMessage of some sort to thread B, and then thread B can handle it and call foo.

That works, but what if thread B accidentally forgets to call foo, and lets the FooMessage go out of scope? We'd have a bug.

Higher RAII can help with this: we would make FooMessage linear, and make it so only foo can destroy it.

Now, if thread B receives a FooMessage, it can't forget to call foo on it.

I suspect a lot of—maybe even most—messages should be linear.

Also, if we send a linear message through a channel, the channel's receiving end needs to be linear as well. That's a good thing, because it forces us to handle all remaining messages before we drop the receiver.

This serendipitously solves some missed-message concurrency bugs that e.g. Golang channels and Tokio channels 20 are vulnerable to.

In chan.rs's Chan::drop, the while loop drains the incoming message list and just drops them on the floor. Those poor forgotten messages!

Remember to Make a Decision

In the Higher RAII explanation above, we showed how we could make a Transaction object that can only be destroyed via a commit or a rollback function.

Let's include that in our Seven Arcane Uses, and even generalize it a bit: If there is a future decision to be made, Higher RAII guarantees that we'll eventually make it.

I sometimes wish there was something like this for real life.

"Evan, think you're going to cancel that membership?"

"Don't know, still thinking about it."

"How long do you plan on thinking about it?"

"Probably until after it automatically renews."

Alas, I didn't make the decision, and so the default action was taken: my membership was renewed.

Truly, life would be better with Higher RAII. 21

No! The grimoire is compelling me to write this article and spread the curse.

Heed my words, never use Higher RAII, lest you be cursed to see all the places in other languages' code where it could have been used to prevent a bug.

Every time you see the words "remember to", "forget", or "responsible for" in comments or design discussions, you'll see another place that Higher RAII could have helped.

Even among friends you will be alone, the only one able to see the spirits of bugs prowling, knowing their inevitability yet being powerless to prevent them, limited by the technology of your time. Such is the curse. Such is our fate.

I hesitate to spread it to you, my dear readers, but I am compelled by the grimoire and am powerless to stop. I takes all my strength to merely put this side note in, hoping that someone sees it and spreads my warning.

Prevent Leaks

We all know that manually-managed-memory languages (like C) can leak memory, from forgetting to call free. We also know that reference-counted languages can leak memory, from reference cycles.

Did you know that even garbage collected languages (like Java) can have memory leaks too?

Imagine the above cache invalidation example in Java: the forgotten Goblin reference in the HashMap<Location, Goblin> locationToGoblinCache will prevent the GC from reclaiming it, effectively "leaking" it.

So we can already see how Higher RAII could help with leaks, by preventing that kind of accidental outstanding strong reference.

Of all the mainstream languages, I'd say modern C++ is the most resistant to memory leaks, and you'll see why.

Surprisingly, Rust can be more vulnerable to memory leaks than C++! I'll show you what I mean by translating a C++ Red-Black tree to idiomatic Rust, and how it introduces the risk for memory leaks.

Let's say you have this C++ Red-Black Tree node class.

template<typename T>

class RBNode {

public:

T data;

unique_ptr<RBNode<T>> left;

unique_ptr<RBNode<T>> right;

RBNode<T>* parent;

Color color;

};

This has an implicit destructor. When we delete a node, the destructor will automatically delete (and call the destructors for) its left and right children.

Now let's translate this to Rust. The borrow checker doesn't like it when a node can be pointed at by multiple places, so we'll "collectionize": we'll put all these nodes in a collection, a HashMap, and have nodes refer to each other by u64 IDs.

struct RBNode<T> {

data: T,

color: Color,

parent: Option<u64>,

left: Option<u64>,

right: Option<u64>,

};

We would store all these nodes in a HashMap<u64, RBNode<T>>, and separately keep track of which is the root node.

This is great! There's no way to use-after-free, which was a potential problem in the C++ version.

However, we've just introduced another possible bug.

Remember how when we delete a C++ node, its destructor would automatically delete the node's children?

Unfortunately, that doesn't happen anymore in the Rust version. Rust doesn't automatically remove from a hash map when we drop a u64.

This can lead to "orphaned" nodes. This memory is "leaked" until the parent Vec goes out of scope, similar to how Java can leak objects.

The problem is more widespread than we think. Just like above, Rust programs naturally tend to collectionize 22 because the borrow checker generally dislikes when multiple long-lived references point to the same object. 23

We end up with architectures where a lot of collections' structs have IDs referring to other collections' structs instead of owning things directly. The result is often similar to an entity-component-system architecture 24 or a relational database. 25

With this collectionization, the risk for this kind of "memory leak" increases.

But Higher RAII can help!

Let's pretend Rust has a linear keyword, and see what happens.

We'll make a linear OwningIndex<T> struct containing an index. The OwningIndex struct conceptually owns the RBNode its index refers to.

We also made RBNode<T> linear because only a linear struct can contain a linear struct. In fact, that Option

Finally, we make it so only one place can destroy an OwningIndex

linear struct OwningIndex<T> {

index: usize;

}

linear struct RBNode<T> {

data: T,

color: Color,

parent: Option<usize>,

left: Option<OwningIndex>,

right: Option<OwningIndex>,

};

This solves the problem! Though it can be hard to see why, so I'll try to explain:

- When we destroy a RBNode<T> instance, we're forced to take ownership of each child Option<OwningIndex> instances.

- The only way to get rid of an Option<OwningIndex> is to match it into either a (non-linear) None or a (linear) Some<OwningIndex>.

- The only way to get rid of a (linear) Some<OwningIndex> is to destruct it, taking ownership of the contained OwningIndex.

- The only way to get rid of an OwningIndex is hand it to remove_node (or, we could stuff it into another RBNode<T>).

In short, the language forces us to eventually deliver that OwningIndex to another node or to call remove_node.

See also 1vader's insightful comment about this natural collectionization and its benefits and drawbacks.

More precisely, the borrow checker generally doesn't allow us to ever modify something if anything else has a reference to it.

For more benefits and drawbacks of this natural collectionization, also check out this post on EC vs ECS in Roguelikes.

At least relational databases have ON DELETE CASCADE, which is kind of like an owning reference if you think about it.

Solve lookup-after-remove

Our final Arcane Use is to prevent a certain common logic bug.

In C++, if we don't own a Spaceship but we want to access it, we store a pointer Spaceship*. If we destroy the Spaceship, the Spaceship* becomes "dangling" and dereferencing it causes a use-after-free bug.

When we translate that code to Rust, the usual outcome is that we instead store a u64 "Spaceship ID", which is the key into a central HashMap<u64, Spaceship>. We would "dereference" the ID by calling the_hash_map.get(spaceship_id).

Unfortunately, the bug is still here, but in a different form: if we remove the Spaceship from the map, and then call get with that "dangling" u64, we receive a None which likely represents a bug. In other words, Rust turned the use-after-free into a "lookup-after-remove" bug.

Higher RAII can help with this.

Let's make a special LinearHashMap with these three adjustments:

- insert takes a key (u64) and a value (Spaceship) and returns a LinearKey which is simply a linear struct containing the key.

- get takes a &LinearKey and returns a reference to the value (&Spaceship).

- remove takes ownership of the LinearKey, destroys it, and returns the value (Spaceship).

get can return a &Spaceship instead of an Option<&Spaceship> because if the given LinearKey still exists, then we know that the Spaceship is still in the hash map.

This isn't completely immune to problems: we'd still have a bug if we give one map's LinearKey to another map, and a language that can recover from panics will have weakened guarantees here. 26 Solving these will require a little more effort. 27

This particular use case shows an ability with much larger implications, and allows a lot of spooky knowledge at a distance. 28 If you follow this curséd path, you'll eventually arrive at the nebulous "linear reference counting" concept I hinted at in the grimoire. 29

By the way, also check out Niko Matsakis's blog post on what it would look like if Rust had linear "must-move" types! 30

Specifically, some panic unwinding might destroy either the hash map or the key, causing a mismatch.

For the first problem, we can make the LinearKey bound to the map's lifetime, or we can use an assertion, or make a different type for every map, or there are a few other solutions out there.

The second problem isn't so bad, but get might want to panic if the key isn't found. I suspect there's other solutions here that could prevent this problem.

A taste: Here, some code can see that the LinearKey exists, and know that the corresponding object still exists. This technique can be used to, for example, elide generation checks and eliminate many classes of errors.

A hint: We can use the existence of a linear reference as proof that the pointee object still exists, because the object can't be destroyed until the linear references are returned to it.

See this StackOverflow answer for a clever hack to make some types linear!

Can other languages add Higher RAII?

Yes! It generally fits most cleanly into single-ownership-based languages (C++, Rust, Austral, Mojo, Carbon, CppFront, etc.), and Haskell shows us that GC'd languages could have luck too.

To walk this path, there are some steps a language has to follow.

First, let's address exceptions, panics, and async cancellation. The reason that regular RAII requires a zero-argument destructor is that an in-flight exception (or panic, or async task cancellation) will unwind the stack, destroying everything in its path. 31 To do this, it calls a zero-argument destructor or drop() function. So what does an in-flight exception do for a linear type?

The answer: we can add a separate onException function for any linear type; we don't have to conflate exception handling with destructors. This onException would take ownership of the type and attempt to correctly dispose of it. One can imagine a few other mechanisms to help with this too. 32

Exceptions are rather rude, aren't they? You're just having a nice time talking with your fellow local variables and then an exception bursts into the room and starts destroying everything.

Even though this whole scheme is a big improvement, onException would still be a zero-arg no-return-value function, which is a similar challenge as RAII's destructors. We could:

- Allow onException to return data, which is collected and processed later somehow.

- Have the thread register a onExceptedLinearStruct function which introspects the type of the given linear type, and specially handles it.

- Last resort, add it to a global list for handling later.

But even without these measures, we've still reduced the problem drastically and enabled Higher RAII, which is an improvement.

Second, when dealing with generics (like the T in List<T>), they need to take out the assumption that T has a destructor or a zero-argument drop() function.

This can require some standard library changes: a function like std::vector

In Vale, that function instead returns the popped element, so the caller can decide what to do with it.

As Aria Beingessner wrote, this is the main reason that Rust would have difficulty adding linear types: the entire existing ecosystem already assumes that it can drop given types. 33 34 The same would likely apply to C++.

Third, reconcile it with the aliasing introduced by reference counting or garbage collection. One would assume that a reference-counted type can't have Higher RAII, but that's not true: we just need a single reference to be designated as the linear reference. This is similar to how unique_ptr, constraint references (from the grimoire), and generational references work: one reference is a special "owning reference".

Fourth, figure out globals. There's a lot of potential solutions to this one, 35 for example just requiring all globals be non-linear, or requiring a destroyer function for any linear globals. 36

Fifth, send me an email, because there's a lot of interesting hidden design decisions to be made about whether a type is linear, or our usage of a type is linear.

These design decisions go straight to the original definition of linear types and affect, for example, whether linear struct literally means "a linear struct", or whether it just means "a drop()-less struct", a subtle distinction that can have quite a few benefits and tradeoffs.

I hope to write an article about this at some point, but I am compelled by unholy sorcery to complete the grimoire first.

The rest of their post is really good too. Though my experience with Vale shows me that linear-type-enabled generics are more ergonomic than they suggest, once you have the right patterns in place.

Though, ironically, there's a chance that by adding Vale's Rust FFI, I might be indirectly adding Higher RAII to Rust: I may have found a way that Vale can automatically and seamlessly call directly into Rust libraries, without user-written bindings, even though Rust doesn't have a stable ABI.

It would have some interesting constraints:

- We'd only be allowed to put non-linear Vale structs inside Rust structs.

- If we reinterpret a Rust struct as linear, we wouldn't be able to move it into a Rust function (which might drop it).

but if it works, then Vale could just reinterpret external Rust types as linear, or wrap the external Rust types in linear Vale structs. I hope to prototype this over the next several months, so stay tuned!

I'm curious about potentially requiring main to initialize and destroy globals explicitly, and call the "global constructors" for all dependencies (which would presumably then call their dependencies' global constructors and so on). Lot's of interesting benefits and drawbacks to explore on that one.

Last resort, one can just make the global an Option, empty it from inside main, and have the global's destroyer function just assert it's empty.

Higher RAII: The missing piece toward correctness

A major implication of Higher RAII is that it can bring a language's programs much closer to the ever-elusive goal of correctness.

Correctness is two things put together:

- Safety: the assurance that "bad things don't happen", for example, a panic, a crash, or a use-after-free.

- Liveness: the assurance that "good things do happen", for example a plane lowering its landing gear.

Safety helps liveness: a panic'd subsystem can't lower the landing gear. And liveness helps safety too, as we saw in our lookup-after-remove example. Like classical and techno, the two blend and build upon each other in surprising and interesting ways.

Alas, no languages have nailed liveness yet. 37

But there's hope! Linear types and Higher RAII help with liveness and correctness.

There are two languages that could really shine here: Mojo and Austral, both which use an easier form of borrowing for memory safety and speed.

Austral is using linear types today, and the Mojo folks are now looking into adding linear types to Mojo as well.

If they can harness this power well, they could bring correctness to the mainstream in a way that's simple enough to not require users to learn advanced category theory. And that's a huge deal.

I think Vale is close in this regard, but not quite there yet. One can accomplish this in Vale with move-only programming and regions, but that technique is even harder to use than borrow checking.

A lot of my nights are spent chasing a certain holy grail: blending borrow checking with Vale's existing hybrid strategy. This quest has singlehandedly led to half of the grimoire!

Software Architecture Implications

It wouldn't be a Languages ∩ Architecture blog without considering the architectural implications of a feature!

So how does Higher RAII do?

Aside from all the above powers, there's three more benefits and a drawback as well.

Benefit: Makes stateful future changes explicit

The first benefit is that Higher RAII helps our code be explicit. This is better than regular RAII which implicitly and invisibly changes the state of the program.

This is so important that one of Zig's core principles is that there should be no hidden control flow. Higher RAII means we can get RAII's benefits without that hidden control flow.

Benefit: Makes your API twice as easy to use correctly

The second extra benefit is that a function can help your future self, not just your past self.

Today's languages already make it easy to control the past: second(f) can require that you first call first(), by taking in a parameter`f` that only first returns.

However, this is one-sided. first() has no control over whether we ever call second(). We solve that with Higher RAII, by making that intermediate type linear.

In other words, Higher RAII makes it twice as easy to use your API correctly.

When working on a team, this is a major boon. You can now reach across space and time to help your teammate remember to call teardown(thing, 3, true).

Benefit: Less fear to refactor

The third benefit is that by eliminating a certain class of errors, we give ourself more freedom to refactor and improve the codebase.

I explain this nebulous concept in How To Survive Your Project's First 100,000 Lines, but I'll summarize:

- It's easier to change your program if you have tests: you can know that your change isn't breaking the program in unexpected ways.

- It's easier to refactor Java than Python, because Java's static typing means the compiler can sanity check everything you've changed.

- In Java, you can harness the type system a little bit, or a lot. Leaning on it more is generally better.

Adding higher RAII is another step in this direction: it sanity checks that your modified code actually did remember to call everything it was supposed to.

This strengthens the codebase and makes it more resilient to bugs, which means we're less afraid to do refactors and other long-term investments.

Higher RAII has the above three benefits, but it also has a drawback.

Drawback: linearity can be viral

The first drawback is that linearity is an upwardly viral constraint on our data, in that it can impose requirements on those who contain it.

For example, if a Spaceship is linear, then so is List<Spaceship>, and likely anything that contains it, such as a Shipyard. 38

This isn't necessarily a problem, unless you're adding a linear member to an existing non-linear struct: it could case an inconvenient refactor.

This drawback can be mitigated by instead defining a custom drop() for the Shipyard, if it has the necessary data to call some other function to destroy the Spaceships, but that's not always possible.

Or, if struct Outer contains linear struct Inner, then Outer will likely need to be linear as well, because Outer's auto-generated drop() function doesn't know what to do with Inner.

See Bob Nystrom's What Color is Your Function article.

Though sometimes, a normal function can still call an async function and instead put the resulting Future onto a list somewhere instead of awaiting it.

When we have a &mut in Rust, we usually need to get it via a parameter. This imposes the restriction on our caller, and all our callers' callers, that none of them have a reference to this object.

Fortunately, we can mitigate the upwardly viral spread with .clone()s or reference counting, though both can have performance implications.

That's all!

One reason I love making languages is that I get to play with and write about new techniques and share their implications with the world. I didn't realize it at the time, but I've been playing with Higher RAII since my very first article four years ago.

After using it and seeing its potential, it's become a central feature of Vale, manifesting its benefits in user-space programs, the standard library, and even some aspects of Vale's memory safety approach.

There's so much more I could write about them! But alas, this one is almost... oh wow, 19 pages. Congrats on getting to the end!

I hope you all enjoyed this post! I'm grateful to have all of you to share this arcane nonsense with. If you have any questions, always feel free to reach out via email, twitter, discord, or the subreddit.

Donations and sponsorships for Vale are currently paused, but if you like these articles, please Donate to Kākāpō Recovery and let me know! I love those birds, let's save them!

Cheers,

- Evan Ovadia

Thank you!

I want to give a huge thanks to Arthur Weagel, Kiril Mihaylov, Radek Miček, Geomitron, Chiuzon, Felix Scholz, Joseph Jaoudi, Luke Puchner-Hardman, Jonathan Zielinski, Albin Kocheril Chacko, Enrico Zschemisch, Svintooo, Tim Stack, Alon Zakai, Alec Newman, Sergey Davidoff, Ian (linuxy), Ivo Balbaert, Pierre Curto, Love Jesus, J. Ryan Stinnett, Cristian Dinu, and Florian Plattner (plus a very generous anonymous donor!) for sponsoring Vale over all these years.

Your support has always given me the strength and resolve to explore these arcane corners of the world. And even though I'm not doing sponsorships for a while, it's awesome to know you're with me in spirit. Axes high!