I've had some pretty surreal experiences while making Vale. 0 The weirdest was when I realized the hidden fundamental truth beneath a lot of the programming languages we use today.

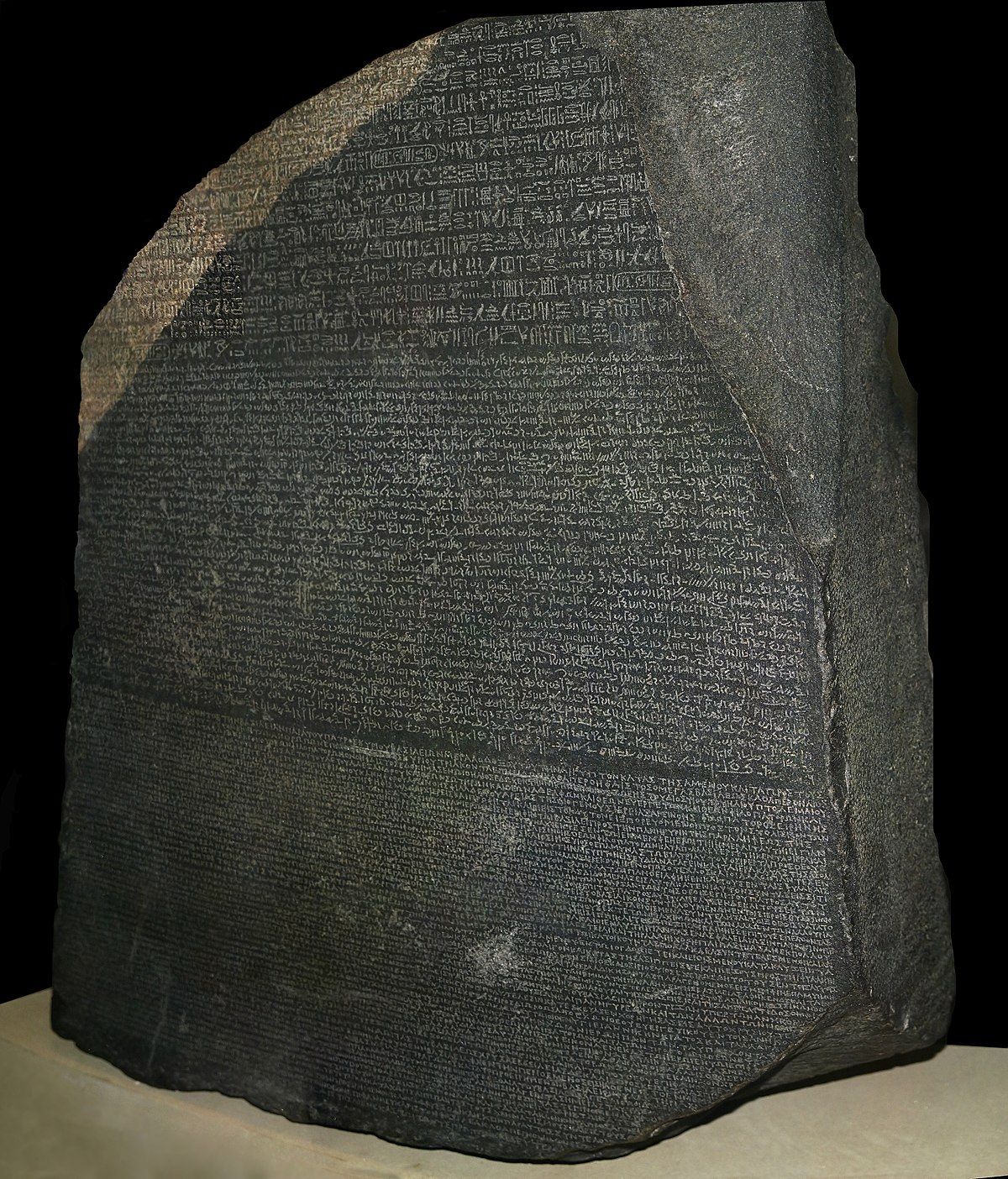

The ancient writings that lit up a new field

In July of 1799, a soldier was digging some foundations to expand a fortress in the Egyptian town of Rashid, when he stumbled upon an ancient-looking slate. He took it to his commanding officer, who realised that it could be important, or at the very least, valuable.

The slate had writings in three languages, including ancient Egyptian hieroglyphs. Nobody knew how to read hieroglyphs yet, except for some oval shapes which are known to contain the names of kings and queens.

The other two languages say the same thing as each other, and also mention those same kings and queens.

The scholar Jean-Francois Champollion used these clues to eventually decipher the entire stone, and enabled us to finally understand ancient Egyptian hieroglyphs.

I wonder what Champollion felt in that moment, when he had that entire slate translated for the first time. Probably overwhelmed and excited at the potential!

I'm no scholar, and I certainly didn't discover anything new. But I think I felt that same overwhelm and excitement when Vale showed me the hidden truths that led to the creation of our linear-aliasing model, which lets us use linear types to completely eliminate Vale's memory safety costs.

And a lot of unexpected experiences! There was one time where I dove down an ancient history rabbit hole, just so I could add some flavor to an article about memory safety.

A different way to get memory safety

Recently, I was making a sample program to show how Vale's regions can blend borrowing 1 with shared mutability.

I was surprised to find that the sample program had almost zero memory-safety overhead, except for a tiny bit in this (paraphrased) code:

func RandInt(rand &Random) i64 {

x = rand.num; // generation check, dereference

set x = x + (x / 200096i64); 2

set x = x - (x * 33554432i64);

set x = x + (x / 134217728i64);

set rand.num = x; 3 // generation check, dereference

return x;

}

// Returns a 2D array of random bools.

func MakeBoard(

rand &Random,

num_rows int,

num_cols int)

[][]bool {

rows = [][]bool(num_rows);

foreach row_i in 0..num_rows {

row = []bool(num_cols);

foreach col_i in 0..num_cols {

r = RandInt(rand);

row.push((r mod 2) == 0);

}

rows.push(row);

}

return rows;

}Specifically, when RandInt's rand.num dereferences the rand object, it does an implicit generation check assertion to ensure the object is still alive.

Normally, I don't believe in chasing zero-cost memory safety. Playing overhead golf can often lead to chasing diminishing returns or prematurely optimizing. Besides, a generation check is usually only as expensive as a bounds check (in other words, usually negligible).

But still, I wondered if it could be done. Could we get rid of that last little bit of overhead?

A few weeks later, I discovered the solution.

"Borrowing" is how we can temporarily freeze data to make accessing it much more efficient. If the compiler knows some data won't change, then it doesn't have to incur any memory safety overhead. This was popularized by Rust, and we found a way to compose it with shared mutability via regions.

The set statement, like set x = 10;, reassigns an existing variable, equivalent to C's x = 10;. Vale has a set keyword so that its declarations can be simpler, like x = 4;.

The optimizer is actually smart enough to optimize this second generation check out on its own.

Moving into and out of functions

Instead of taking a &Random reference parameter like this:

func RandInt(rand &Random) i64 {

x = rand.num;

set x = x + (x / 200096i64);

set x = x - (x * 33554432i64);

set x = x + (x / 134217728i64);

set rand.num = x;

return x;

}

...RandInt could take (and return) an owned Random, like this:

func RandInt(rand Random) (i64, Random) {

x = rand.num;

set x = x + (x / 200096i64);

set x = x - (x * 33554432i64);

set x = x + (x / 134217728i64);

set rand.num = x;

return (x, rand);

}

Here's what we changed:

- rand &Random became rand Random. Instead of taking a reference, the caller moves the actual Random instance itself.

- return x became return (x, rand); which returns a two-element tuple, which also has the Random instance.

- The return type i64 became (i64, Random) accordingly.

Now, rand.num isn't dereferencing a reference, it's accessing a field of an owned value.

Vale's compiler sees this, and doesn't insert a generation check, because any object that we own is still alive.

Of course, that requires we change how MakeBoard calls RandInt.

It goes from this:

r = RandInt(rand);where rand is a &Random reference...

...to this:

[r, set rand] = RandInt(rand);where rand is an actual Random, not just a reference.

This new line will:

- Moves the rand instance into the RandInt call.

- Uses the square braces to break apart the returned tuple into its two elements:

- The i64 part assigned to the local variable r.

- The Random part is put into the rand variable via set rand.

Here's the rest of MakeBoard, for context.

It went from this:

// Returns a 2D array of random bools.

func MakeBoard(

rand &Random,

num_rows int,

num_cols int)

[][]bool {

rows = [][]bool(num_rows);

foreach row_i in 0..num_rows {

row = []bool(num_cols);

foreach col_i in 0..num_cols {

r = RandInt(rand);

row.push((r mod 2) == 0);

}

rows.push(row);

}

return rows;

}

...to this:

// Returns a 2D array of random bools.

func MakeBoard(

rand Random,

num_rows int,

num_cols int)

([][]bool, Random) {

rows = [][]bool(num_rows);

foreach row_i in 0..num_rows {

row = []bool(num_cols);

foreach col_i in 0..num_cols {

[r, set rand] = RandInt(rand);

row.push((r mod 2) == 0);

}

rows.push(row);

}

return (rows, rand);

}

Let's break that down:

- The rand &Random parameter became rand Random, to take an actual Random instance instead of a reference.

- The return type [][]bool became ([][]bool, Random), so we can later return the Random instance.

- The [r, set rand] = RandInt(rand); line changed, as explained above.

- return (rows, rand) returns the new board and the Random instance to the caller.

Instead of passing a reference, it's like we're "lending" an owned value to a function, which then returns it to us. For now, let's call this maneuver own-lending.

If you've used a language like Rust before, this might feel familiar: own-lending is semantically equivalent to borrowing a &mut Random and passing it around.

Just for fun, I tried applying own-lending to the entire program. Sure enough, the --print_mem_overhead true flag reported that I reduced generation checks down to zero.

Wait, zero?!

This blew my mind. That shouldn't be possible. I've never seen --print_mem_overhead print zero before!

Yet there it was.

I sat back from my desk, and got up for a walk. After ten minutes, I realized I'd just been staring at a wall, and hadn't actually walked anywhere. 4

It slowly sank in over the next few days.

Using own-lending, we can completely eliminate every single generation check in a program, to get memory safety without borrow checking, reference counting, or tracing garbage collection. 5

This wall-staring happens more often than you might think. The same thing happened when I first realized that regions could also eliminate memory safety costs.

Discovering Linear Types

I imagine that Jean-Francois Champollion felt overwhelmed, discovering how to read ancient Egyptian hieroglyphs. He had found the secret to exploring an ancient culture in a way nobody ever has before.

I didn't discover anything like what Champollion did, but I certainly felt overwhelmed when I saw that zero.

And I hadn't found anything new, of course. I was just learning the natural consequence of Vale's owned values being linear types, which is a distant cousin of Rust's affine types. Both are a kind of "substructural type system". Apparently, people have been writing about substructural type systems' abilities for decades! 6

With this new understanding, I also considered Rust from a different perspective. It led me to the eventual truth: their memory safety doesn't come from borrow checking, not exactly. It comes from their types being affine. In a way, &mut references are just syntactic sugar, 7 and you can take `&mut` out of Rust and not much would change semantically.

The same is true of Vale, apparently. If you program without making any references, your program becomes memory safe, and has no memory-safety related overhead!

I'm no type theorist. I vaguely knew that Vale's owned values were linear, but I had no idea they could do so much. This came as a huge surprise to me.

There's also a very interesting language named Austral, based on linear types!

"Syntactic sugar" is what we call features that just make the code a bit cleaner, but aren't really required to express a certain computation.

Necessary techniques

In the two functions I showed above, it was pretty simple to do own-lending.

Soon, I discovered that we need some other features to support own-lending fully. In particular, a language needs:

- Struct "destructuring"

- Array insert and extract

Destructuring

We destructured a tuple in the above example, and we'd need to do it again if we want to take ownership of something from a containing struct.

Let's say that Random instance was already owned by a GameState struct like this:

struct GameState {

player_name str;

rand Random;

}

exported func main() {

game = GameState("Vast", Random(42));

// Error!

MakeBoard(game.rand);

...

}The compiler would give us an error, because taking that Random out of the GameState would leave the GameState struct in an invalid state.

Instead, we'll need to temporarily destroy the GameState and then recreate it.

Instead of writing MakeBoard(game.rand);, we would write:

[player_name, rand] = game;

set rand = MakeBoard(rand);

set game = GameState(player_name, rand);It's verbose, but it works!

A language could make this less verbose with a few different approaches, which I'll talk about further below. First, let's make this work with arrays!

Array Extract

If the Random was in an array, we can take it out using an extract method, and put it back in using an insert method.

arr []Random = …;

...

rand = arr.extract(3);

set rand = MakeBoard(rand);

arr.insert(3, rand);Depending on the implementation of extract and insert, this could be costly, up to O(n) time as it shifts all the subsequent elements by one.

There are more efficient ways to implement it. Some options:

- extract might swap the last element to this position, and insert might put it back at the end.

- We could temporarily destroy the containing array to give us ownership, similar to what we did with structs above.

- If we're using generational references like Vale is, we could temporarily change the object's generation number.

We'll talk about that last one further below.

Some Sugar 8

We could add some syntactic sugar 9 to temporarily destroy an array or a struct, so we can take ownership of the contained data.

Recall this code from above:

// Create a GameState struct

game = GameState("Vast", Random(42));

...

[name, rand] = game;

set rand = MakeBoard(rand);

set game = GameState(name, rand);

Those last three lines could conceptually become this:

borrow game.rand as rand;

set rand = MakeBoard(rand);or this alternative syntax:

rand = borrow game.rand;

set rand = MakeBoard(rand);

At the end of the scope, the language would use rand to reconstruct a GameState to put in game.

Recall the code with the array:

arr []Random = …;

...

rand = arr.extract(3);

set rand = MakeBoard(rand);

arr.insert(3, rand);

Those last three lines could conceptually become this:

borrow arr[3] as rand;

set rand = MakeBoard(rand);or this alternative syntax:

rand = borrow arr[3];

set rand = MakeBoard(rand);

And instead of a function taking and returning an owned value, we can add some syntactic sugar to do that for us.

Instead of:

func RandInt(rand Random) (i64, Random) {

x = rand.num;

set x = x + (x / 200096i64);

set x = x - (x * 33554432i64);

set x = x + (x / 134217728i64);

set rand.num = x;

return (x, rand);

}

...we could add an inout keyword:

func RandInt(rand inout Random) i64 {

x = rand.num;

set x = x + (x / 200096i64);

set x = x - (x * 33554432i64);

set x = x + (x / 134217728i64);

set rand.num = x;

return x;

}...so that it could be called like r = RandInt(borrow rand);.

This inout keyword would work exactly like Swift's or C#'s inout keyword, and like references in Val's mutable value semantics. 10 It's also similar to Rust's &mut, but this is part of the variable/parameter, not part of its type.

As a bonus, this makes it easier for the compiler to optimize out any destroying/recreating structs, or shifting around array elements. In Vale, we would just temporarily change the containing struct's/array's generation.

It's unclear whether we'll add this sugar in Vale. If you have any opinions on the matter, make an issue or join the discord server!

Everything in this section is theoretical, we haven't decided on any particular sugar to add to Vale for this.

"Syntactic sugar" is what we call features that just make the code a bit cleaner, but aren't really required to express a certain computation.

Val is a different language from Vale and Vala (we all have similarly great taste in naming!)

Benefits and Drawbacks

To get a sense of this "linear style", I like to compare it to Rust's borrow checking, because they're surprisingly similar.

They both have some benefits:

- Memory safety with no direct extra costs. 11

- Safety from data races; data races happen when two threads use references to access the same object, but we only ever allow one reference.

And they both have some drawbacks:

- We often can't have multiple references to some data (except temporarily with borrow checking 12 13).

- When we would otherwise have multiple references, we instead sometimes need to "slice" our data in unintuitive ways, which can cause some extra refactoring.

- We can't use optimal patterns like intrusive data structures and graphs which are sometimes faster in certain situations.

- We can't use certain patterns that decouple our code and make it more flexible, like observers, back-references, dependency references, callbacks, and delegates.

All of the above drawbacks exist because neither linear typing nor borrow checking can have shared mutability. In other words, multiple mutable references to the same data.

I say "direct" because it does have some indirect overhead; guideline #2 leads us to put more objects into arrays and hash maps, which leads to more bounds checking and hashing. Sometimes we also get more cache misses, though sometimes we get less.

"Shared" references in Rust parlance, and they're read-only unless we use them to get to a Cell, RefCell, Mutex, etc.

Though, in both linear typing and borrow checking, long-lived references to the same data will still need to be made into indices or IDs into a central collection which holds the data.

Addressing the Drawbacks

Are there any ways we can address those downsides without sacrificing the benefits?

In other words, is there a way we can have linear types and shared mutability?

Adding borrow checking partially helps with drawback #1 14 but it still has drawbacks #2, #3, and #4. Rust's Rc and RefCell helps with those, but they're incompatible with linear types' benefits 15, and have their own drawbacks (like forcing heap allocation and not having higher RAII).

The other solution, surprisingly, is to blend generational references back in.

Each object is still owned linearly; only one place can own an object at a time. But we'll also allow a generational reference to point at the object.

With this, we can address a lot of the drawbacks:

- We can have as many references to data as we want, though there is still only one owner.

- We don't need to restructure our program to accommodate them.

- We can use patterns like intrusive data structures and graphs. 16

- We can use observers, back-references, dependency references, callbacks, delegates, whatever we want.

- We still don't have data races, because Vale changes the generations of any objects that cross region boundaries (unless we safely avoid that with isolates).

Generational references seem to occupy a sweet spot because they allow objects to be linear (they're owned by only one place), yet allow shared mutability in a way that doesn't artificially extend the lifetime of the object.

Since we're combining linear types with aliasing, we'll call this the linear-aliasing model.

The mark of a good language is when its features compose well and don't conflict with each other. It seems we're on the right track with this model, as it composes linear types with shared mutability pretty well.

Partially because borrow checking lets us have temporary references, not persistent ones. The borrow checker has a hard time putting references into data structures.

We can't put a linear type into an Rc because Rc throws away its contents when the last alias disappears.

In Vale, we would still have all nodes owned by a central collection, but we could still have references between nodes just fine.

Matches Made in Heaven

A pleasant surprise, we're back to where we started!

But we arrive with a new understanding:

- We can code in a "linear-first" fashion, and then only add references if we want some extra flexibility.

- Alternatively, we can code with a lot of references, and then bring them down to zero where we want some more speed.

In other words, we now have complete control over the flexibility vs. performance tradeoff, something I've never seen a language do well.

It was quite a shock, realizing that Vale could do this the entire time.

And it made me wonder, what does this mean for regions, Vale's other mechanism for eliminating generation checks?

Then I realized, they fit perfectly together. We can use regions to look at a function's inputs in a zero-cost way, and use this "linear style" for any new data the function creates.

I tried this out in a little roguelike game. The vast majority of generation checks were eliminated by regions, and the rest were eliminated by this new linear style.

You can try this too! Just invoke the Vale compiler with the --print_mem_overhead true flag to make the program print out how many generation checks you can still eliminate (and if you want to use regions too, check out the experimental branch described here).

Conclusion and Next Steps

For those of us that like to squeeze every little last drop of performance out of our programs, this is a pretty exciting realization.

But ironically, that's a small minority. For the vast majority of Vale programs, generational references aren't a noticeable source of overhead, and none of this will matter. And that's a good thing; one of Vale's main strengths is that you can just make your program, and things will just work and will be fast.

However, this does open up Vale to some new domains. Since we can program in a way that doesnt require generation checks, that means that Vale could be used in new niches, like embedded systems, operating system kernels, and safety-critical software. That's pretty exciting, I think!

Thanks for reading, and I hope you enjoyed this article. If you have any questions, feel free to join the discord or subreddit or reach out via twitter!

Cheers,

- Evan Ovadia